If you've followed the news lately, you've probably seen the headlines warning you how red meat increases mortality. The media seems to love bashing on meat these days for some reason, but what about the cold hard facts? Is it really true that meat will increase your chance of dying?

To answer that, we'll have to look at the full paper by Sinha et al. and see what they had to say. The study included over half a million people, both men and women, which is a pretty impressive number. The participants' lifestyle characteristics (including dietary habits) and deaths from various causes were followed for 10 years. The results led the authors to the following conclusion:

Red and processed meat intakes were associated with modest increases in total mortality, cancer mortality, and cardiovascular disease mortality.

This, in turn, led the media to the conclusion that eating steak might kill you any minute. To see if that's true, a closer look at the study and the results is in order.

Red meat and mortality: correlation or causation?

The first problem with epidemiological studies is that there may be other, unknown factors at work which skew the results and obscure the big picture. These factors are known as confounding variables. For example, if people who eat lots of meat get cancer more often than those who don't eat meat, one explanation could be that meat causes cancer. However, it could also be the case that people who eat meat simply tend to be overweight, and that obesity is what is causing cancer in these people.
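To make the overweight example concrete, here is a minimal sketch using counts that are invented purely for illustration (they are not from the Sinha et al. paper). In this made-up population, meat has no effect on cancer risk within either weight group, yet heavy meat eaters are more often overweight, so the pooled numbers still make meat look harmful.

```python
# Hypothetical counts, invented for illustration only (not from the study).
# Each entry is (cancer cases, group size).
counts = {
    "overweight":    {"high_meat": (160, 800), "low_meat": (40, 200)},
    "normal_weight": {"high_meat": (20, 200),  "low_meat": (80, 800)},
}

def risk_ratio(exposed, unexposed):
    """Risk in the exposed group divided by risk in the unexposed group."""
    exposed_cases, exposed_total = exposed
    unexposed_cases, unexposed_total = unexposed
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)

def pooled(group):
    """Add up cases and group sizes across both weight strata."""
    cases = sum(stratum[group][0] for stratum in counts.values())
    total = sum(stratum[group][1] for stratum in counts.values())
    return cases, total

# Ignoring weight: compare all heavy meat eaters with all light meat eaters.
crude_rr = risk_ratio(pooled("high_meat"), pooled("low_meat"))

# Looking inside each weight group separately.
stratum_rr = {
    name: risk_ratio(stratum["high_meat"], stratum["low_meat"])
    for name, stratum in counts.items()
}

print(f"crude risk ratio: {crude_rr:.2f}")
for name, rr in stratum_rr.items():
    print(f"risk ratio among {name}: {rr:.2f}")
```

With these invented numbers the crude risk ratio comes out at about 1.5, while the risk ratio inside each weight group is 1.0. The whole apparent effect of meat is produced by body weight, which is exactly what a confounding variable does.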

To rule out this possibility, the authors looked at the data to find out the variables that correlate with meat consumption and mortality. Sure enough, there were many such variables. People who ate more red meat and processed meat also smoked more, ate more, weighed more, exercised less and were less educated – all of these factors are known to be associated with increased mortality.

The authors then adjusted for the effects of these variables to see if red meat and processed meat eating alone correlated with mortality. Even though the correlation was now weaker, the positive correlation still remained. In other words, regardless of whether the people were overweight, smoked, or exercised, eating red meat and processed meat still seemed to increase their risk of dying.
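The idea of "adjusting" can be sketched with a simple stratified calculation. The counts below are hypothetical and invented for illustration, and the Mantel-Haenszel risk ratio used here is a simpler stand-in technique, not a reproduction of the authors' own statistical model. It only shows how an association can shrink after adjustment and still remain above 1.

```python
# Hypothetical counts, invented for illustration only (not from the study).
# "exposed" = high meat intake, "unexposed" = low meat intake.
strata = [
    {"name": "smokers",
     "exposed_cases": 144, "exposed_total": 600,
     "unexposed_cases": 80, "unexposed_total": 400},
    {"name": "non-smokers",
     "exposed_cases": 48, "exposed_total": 400,
     "unexposed_cases": 60, "unexposed_total": 600},
]

def crude_risk_ratio(strata):
    """Risk ratio with all strata lumped together (no adjustment)."""
    exposed_cases = sum(s["exposed_cases"] for s in strata)
    exposed_total = sum(s["exposed_total"] for s in strata)
    unexposed_cases = sum(s["unexposed_cases"] for s in strata)
    unexposed_total = sum(s["unexposed_total"] for s in strata)
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)

def mantel_haenszel_risk_ratio(strata):
    """Pooled risk ratio that compares like with like inside each stratum."""
    numerator = 0.0
    denominator = 0.0
    for s in strata:
        n = s["exposed_total"] + s["unexposed_total"]
        numerator += s["exposed_cases"] * s["unexposed_total"] / n
        denominator += s["unexposed_cases"] * s["exposed_total"] / n
    return numerator / denominator

crude = crude_risk_ratio(strata)
adjusted = mantel_haenszel_risk_ratio(strata)

print(f"crude risk ratio:    {crude:.2f}")
print(f"adjusted risk ratio: {adjusted:.2f}")
```

In this toy data set the crude risk ratio is roughly 1.37, and the smoking-adjusted one drops to 1.2. The association is weaker after adjustment but does not disappear, which mirrors the pattern described above. Note that the adjustment can only account for variables that were actually recorded.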

So does this mean that red meat and processed meat eating causes death? Maybe. The results certainly don't rule out the possibility of causation, but they also don't prove it. The confounding variables that the authors adjusted for may not be all the confounding variables. They only looked at the variables included in the questionnaire – smoking, exercise, education, etc. – but that's not to say that there couldn't have been other factors at play.

For example, perhaps the meat eaters also ate more processed carbs. The data doesn't say, so there's no way of knowing. But since we already know that meat eaters exercise less, smoke more, and generally live less healthy lives, it's not unreasonable to assume that they might also be the ones who order their steaks with french fries instead of salad.

Red meat, processed meat and white meat: what do they include?

Even if we accept the claim that red meat causes an increase in mortality, there is another big problem with the study that has to do with definitions. What exactly do the terms red meat, processed meat and white meat mean in this context?

Usually, red meat simply means any meat that is red in color when it's raw, whereas white meat is meat that is, well, whitish – or at least not as red as red meat (the difference in color depends on the amount of myoglobin in the muscle). So things like beef and lamb are considered red meat, while pork and chicken are considered white meat. Processed meat is a bit more ambiguous, but it usually means meat preserved by smoking, curing, salting, or by adding preservatives. This category includes foods like bacon, ham and sausages.

Now, if you were to think that these definitions are what the authors used in their study, you'd be sorely mistaken. The red meat category used in the questionnaire included the following items:

All types of beef and pork, including bacon, beef, cold cuts, ham, hamburger, hotdogs, liver, pork, sausage, steak, and meats in foods such as pizza, chili, lasagna, and stew.

White meat was considered to be any of the following:

Chicken, turkey, fish, including poultry cold cuts, chicken mixtures, canned tuna, and low-fat sausages and low-fat hotdogs made from poultry.

Finally, here's the list for processed meat:

Bacon, red meat sausage, poultry sausage, luncheon meats (red and white meat), cold cuts (red and white meat), ham, regular hotdogs and low-fat hotdogs made from poultry.

What does this mean? It means that red meat not only includes beef steaks and pork, but also processed foods like bacon, hotdogs, sausages, and even meat in foods like pizza. So perhaps the problem is not red meat per se, but processed red meat? Again, there's no way to tell based on the data, since the authors didn't make a distinction between the two. However, since processed meat – using the authors' definition – did correlate with increased mortality, this seems like a valid hypothesis.
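The overlap between the categories is easy to see when the three quoted lists are written down as sets. The item labels below are my own shorthand for the questionnaire wording (for example, "red meat sausage" is shortened to "sausage" and "regular hotdogs" to "hotdogs"), so treat the exact names as assumptions rather than the study's own coding.

```python
# Item labels are shorthand for the lists quoted above; the exact
# names are assumptions made for this sketch, not the study's coding.
red_meat = {
    "bacon", "beef", "cold cuts", "ham", "hamburger", "hotdogs",
    "liver", "pork", "sausage", "steak",
    "meat in mixed dishes (pizza, chili, lasagna, stew)",
}
white_meat = {
    "chicken", "turkey", "fish", "poultry cold cuts", "chicken mixtures",
    "canned tuna", "low-fat poultry sausage", "low-fat poultry hotdogs",
}
processed_meat = {
    "bacon", "sausage", "poultry sausage", "luncheon meats", "cold cuts",
    "poultry cold cuts", "ham", "hotdogs", "low-fat poultry hotdogs",
}

# Items counted both as red meat and as processed meat.
red_and_processed = red_meat & processed_meat

# Items counted both as white meat and as processed meat.
white_and_processed = white_meat & processed_meat

# Red meat items that do not appear on the processed list.
red_not_processed = red_meat - processed_meat

print("red and processed:  ", sorted(red_and_processed))
print("white and processed:", sorted(white_and_processed))
print("red, not processed: ", sorted(red_not_processed))
```

Under this shorthand, five of the eleven red meat items also sit on the processed meat list, compared with two of the eight white meat items. Any effect of processing is therefore mixed into the red meat result to a much larger degree than into the white meat result.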

In addition, since most pizzas have some kind of meat on them, those participants who ate a lot of pizza were likely included in the quintiles eating more meat than those who didn't eat pizza. But if the pizza eaters die younger, is the problem the meat in the pizza or the pizza itself? Should we blame the toppings or the dough? The idea that there could be another culprit to explain the increased mortality sure begins to seem plausible.

If red meat is bad, why is white meat good?

The result that took the authors by surprise is that white meat, unlike its bad cousin red meat, was actually associated with reduced total mortality and cancer mortality. For cardiovascular disease deaths, there was only a slight increase. For death from injuries and sudden death, no association was found.

So is there something in red meat that is lacking in white meat that kills people? One possibility is the higher iron content of red meat, which might be a problem, especially later in life. The theory of mineral accumulation causing aging is certainly interesting, but I would like to see further studies before drawing any conclusions.

The authors could've taken the usual route and shifted some of the blame onto saturated fat, but instead, they don't offer any explanation of why red meat and processed meat are bad but white meat is good. My guess is that maybe it's not red meat in general that is the problem here, but processed red meat – foods like hotdogs, bacon, etc.

In fact, I would go so far as to say that the problem might be any processed meat, be it red or white. This would help explain why poultry hotdogs and pork hotdogs were among the foods associated with increased mortality, but unprocessed poultry was not. I assume the only reason processed meat resulted in a seemingly smaller increase in mortality than red meat was that the amount of processed meat eaten by the participants was smaller. Hotdog eaters having a lower cancer incidence than rare steak eaters would be a truly surprising result.

Thus, perhaps it's less about the color of the meat and more about the amount of processing, at least in this study. Cooking alone, for example, causes the formation of advanced glycation end products (AGEs), and the difference in terms of harmful byproducts between cooking a medium steak and eating a processed hotdog is probably quite big.

Conclusion

All in all, I don't think this study tells us much about the potential risks of eating meat. The main problems with this study are:

1) The possibility of unknown confounding variables that might explain the increased mortality from red meat and processed meat consumption. For example, the amount and type of carbohydrates eaten by the participants were not measured by the questionnaire.

2) The fact that the red meat category included foods such as pork, bacon, sausage, hotdogs, and pizza toppings, i.e. foods that are either not usually considered red meat or are also processed meats. Therefore, it is unclear whether the association was due to processed red meat rather than red meat per se.

3) The inverse relationship between white meat and mortality. Those who consumed foods categorized as white meat had a lower risk of cancer and total mortality. It is not apparent why red meat would increase risk while white meat would decrease it. Again, one possible explanation is that the white meat category included fewer processed foods than the red meat category.

For more information on diets and health, see the following posts:

Low-Carb vs. Low-Fat: Effects on Weight Loss and Cholesterol in Overweight Men

Intermittent Fasting: Understanding the Hunger Cycle

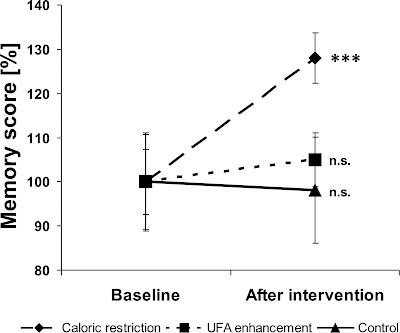

Caloric Restriction Improves Memory in the Elderly

A Typical Paleolithic High-Fat, Low-Carb Meal of an Intermittent Faster
